Google Trends is a goldmine. It shows what the world is searching for right now. Marketers love it. Researchers rely on it. Curious developers dig into it for fun. But scraping Google Trends data with Python can feel tricky at first. Errors pop up. Requests fail. Data comes back empty. Don’t worry. You can fix all of that.

TL;DR: Scraping Google Trends with Python is possible and useful, but common errors can break your script fast. Most problems come from rate limits, bad parameters, or outdated libraries. Using tools like pytrends, handling retries, and validating inputs will save you hours of frustration. Small fixes make a big difference.

Why Scrape Google Trends?

Before we dive into errors, let’s talk about the “why.”

- Track keyword popularity over time.

- Compare search terms side by side.

- Spot seasonal trends in seconds.

- Generate content ideas backed by real data.

Manual searches are fine. But automation is better. Python lets you pull data regularly. Daily. Hourly. Even every few minutes if needed.

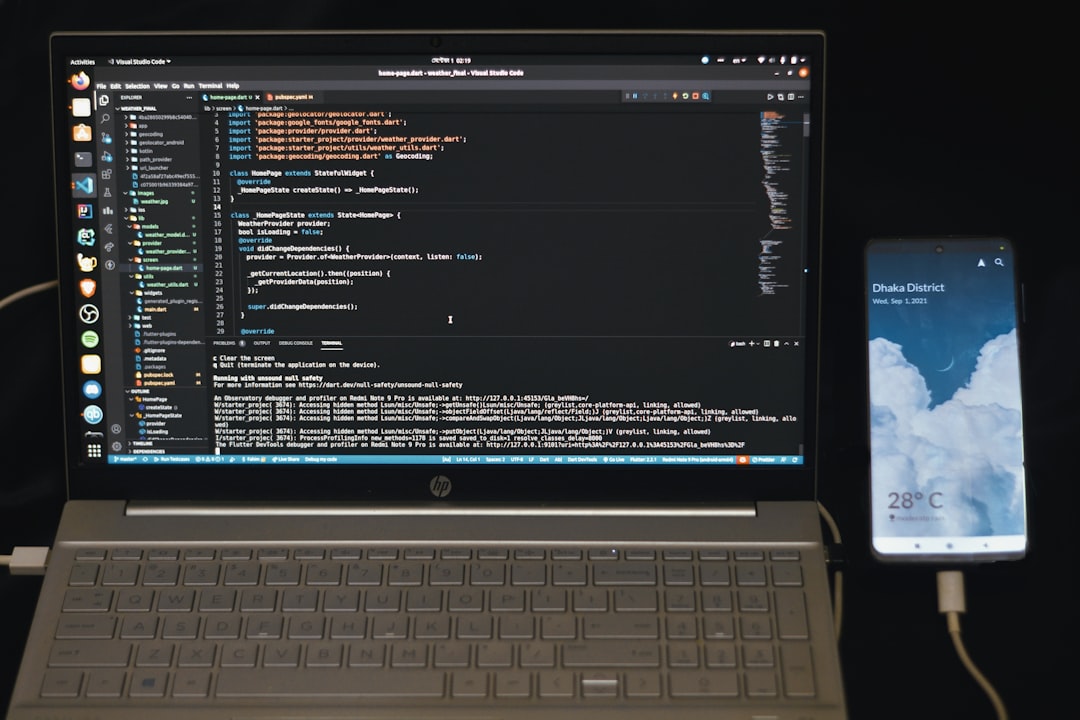

The Simplest Way: Using Pytrends

Scraping Google Trends directly with raw HTTP requests is messy. Google uses tokens. Cookies. Dynamic parameters. It changes often.

The safer route? Use pytrends. It is an unofficial Google Trends API for Python.

Install it with:

pip install pytrends

Basic example:

from pytrends.request import TrendReq

pytrends = TrendReq()

pytrends.build_payload(['Python'])

data = pytrends.interest_over_time()

print(data.head())

Looks simple. And it usually is. Until it breaks.

Common Error #1: Too Many Requests (429 Error)

This one is very common. You send too many requests in a short time. Google blocks you.

You may see:

- Response code 429

- Empty dataframe

- Connection error

Why It Happens

- No delay between requests

- Sending multiple keyword batches too fast

- Using the same IP repeatedly

Reliable Fix

Add delays. Yes. Simple as that.

import time

time.sleep(10)

Wait 5 to 15 seconds between calls. Rotate proxies if you must scale. But go slow if possible. Google does not like aggressive scraping.

You can also use retries:

from requests.adapters import HTTPAdapter

from urllib3.util.retry import Retry

Together, these two classes let a requests session retry failed calls automatically, so your script can recover instead of crashing.
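
If you would rather control retries yourself, here is a minimal sketch. It assumes you wrap each pytrends call in a zero-argument function. The name `call_with_backoff` and its parameters are hypothetical and are not part of pytrends or requests. The delay doubles after each failed attempt.

```python
import time


def call_with_backoff(fetch, max_attempts=4, base_delay=5.0, sleep=time.sleep):
    """Call fetch(); on any exception, wait and retry with a doubling delay.

    Hypothetical helper for illustration. `fetch` is any zero-argument
    callable, for example: lambda: pytrends.interest_over_time()
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Waits base_delay, then 2x, then 4x, and so on.
            sleep(base_delay * 2 ** (attempt - 1))
```

Usage could look like `data = call_with_backoff(lambda: pytrends.interest_over_time())`. `TrendReq` also accepts `retries` and `backoff_factor` arguments, which may be simpler if they work with your installed versions.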

Common Error #2: Empty DataFrame Returned

This one is sneaky. No error appears. But your dataset is empty.

You expect numbers. You get nothing.

Why It Happens

- Keyword has low search volume

- Incorrect timeframe format

- Geo parameter is invalid

Reliable Fix

Check your parameters carefully.

For example, timeframe must look like:

- 'today 12-m'

- 'now 7-d'

- '2024-01-01 2024-12-31'

If the keyword is too obscure, Google may not return data. Try broader terms first. Test inside the browser. If it shows no chart there, your script won’t either.

Always verify:

if data.empty:
    print("No data returned")
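
You can also catch a malformed timeframe before the request goes out. The sketch below only checks the shape of the string against the three formats listed above. The function name `looks_like_timeframe` is hypothetical, and the check does not know which number and unit combinations Google actually accepts.

```python
import re
from datetime import date

# Shapes taken from the examples above. Assumption: the accepted
# numbers and units are not verified here, only the overall pattern.
_RELATIVE = re.compile(r"(today|now) \d+-[a-zA-Z]")
_DATE_RANGE = re.compile(r"(\d{4}-\d{2}-\d{2}) (\d{4}-\d{2}-\d{2})")


def looks_like_timeframe(timeframe):
    """Return True if the string has one of the shapes listed above."""
    if _RELATIVE.fullmatch(timeframe):
        return True
    match = _DATE_RANGE.fullmatch(timeframe)
    if not match:
        return False
    try:
        start = date.fromisoformat(match.group(1))
        end = date.fromisoformat(match.group(2))
    except ValueError:
        return False  # for example month 13 or day 32
    return start <= end
```

A string that passes this check can still return an empty DataFrame, so keep the `data.empty` test as well.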

Common Error #3: Token or Cookie Issues

Google Trends uses tokens behind the scenes. Pytrends handles this for you. Mostly. But sometimes sessions expire.

You may see:

- JSON decode errors

- Unexpected response format

- 403 forbidden errors

Reliable Fix

Reinitialize your connection.

pytrends = TrendReq(hl='en-US', tz=360)

Set the language (hl) and the timezone offset in minutes (tz) explicitly. It reduces odd responses.

Also keep pytrends updated:

pip install --upgrade pytrends

Old versions break fast when Google changes something internally.

Common Error #4: Incorrect Geo Codes

You want US data. Or UK data. Or maybe Japan.

But you pass random strings.

Example mistake:

- Using “USA” instead of “US”

- Using lowercase country codes

Reliable Fix

Use uppercase ISO 3166-1 two-letter country codes.

- US

- GB

- JP

- DE

Example:

pytrends.build_payload(['Python'], geo='US')

Small detail. Big difference.
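
The rule above can be sketched as a small normalizer. The name `normalize_geo` is hypothetical. The pattern is an assumption: two letters, optionally followed by a sub-region suffix such as US-CA. It checks the shape only, so a made-up code like ZZ still passes.

```python
import re

# Assumption: a two-letter country code, optionally with a region suffix.
_GEO = re.compile(r"[A-Z]{2}(-[A-Z0-9]{1,3})?")


def normalize_geo(geo):
    """Trim and uppercase a geo code; raise ValueError if the shape is wrong.

    An empty string passes through unchanged, since pytrends uses it
    for worldwide data.
    """
    cleaned = geo.strip().upper()
    if cleaned == "" or _GEO.fullmatch(cleaned):
        return cleaned
    raise ValueError(f"geo {geo!r} is not a two-letter country code")
```

With this in place, `normalize_geo('us')` gives `'US'`, while `normalize_geo('USA')` raises an error before any request is sent.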

Common Error #5: Data Scaling Confusion

This is not a technical error. But it confuses people all the time.

Google Trends data is scaled 0 to 100. It is not raw search volume.

- 100 = peak popularity

- 50 = half of peak

- 0 = low or insufficient data

If you compare keywords in separate requests, each gets its own 0–100 scale. That means you cannot compare them directly.

Reliable Fix

Compare keywords in the same payload:

pytrends.build_payload(['Python', 'Java'], timeframe='today 12-m')

This ensures proper relative scaling.
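
To see why separate requests mislead, here is a simplified model of the scaling. The numbers are made up for illustration and are not real Trends data. Google's actual normalization is more involved than dividing by a peak. The function name `scale_together` is hypothetical.

```python
def scale_together(series_by_keyword):
    """Scale every series against the single highest value in the request."""
    peak = max(max(values) for values in series_by_keyword.values())
    return {
        keyword: [round(100 * v / peak) for v in values]
        for keyword, values in series_by_keyword.items()
    }


# Made-up interest figures, purely illustrative.
python_raw = [500, 1000, 800]
java_raw = [50, 100, 80]

# Separate requests: each keyword gets its own 0-100 scale.
separate_python = scale_together({"Python": python_raw})["Python"]
separate_java = scale_together({"Java": java_raw})["Java"]

# One request: both keywords share one scale.
together = scale_together({"Python": python_raw, "Java": java_raw})

print(separate_python)  # [50, 100, 80]
print(separate_java)    # [50, 100, 80]
print(together)         # {'Python': [50, 100, 80], 'Java': [5, 10, 8]}
```

Scaled separately, the two keywords look identical. Scaled together, the tenfold gap shows up.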

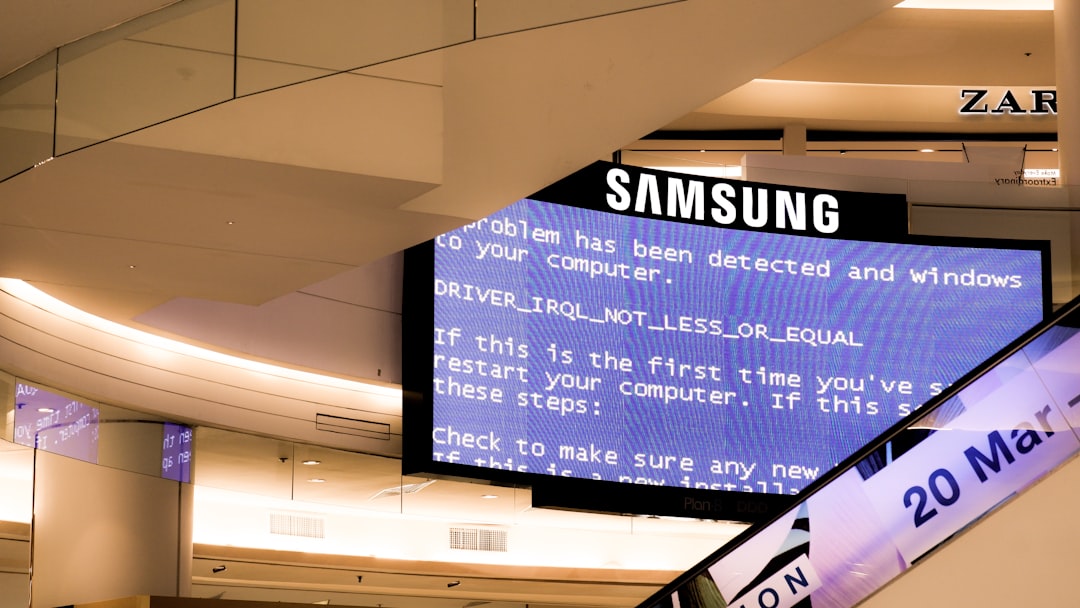

Common Error #6: Blocking Due to Automation Detection

Google detects bots. If your script runs on a server and hits Trends frequently, you may get blocked.

Symptoms:

- CAPTCHA page returned

- HTML instead of JSON

- Sudden consistent failures

Reliable Fix

- Add random sleep intervals

- Use rotating proxies carefully

- Mimic realistic browsing patterns

Do not hammer the endpoint every second. Be polite. Stable automation is slow automation.
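
A random sleep interval takes only a few lines. This is a minimal sketch. The name `polite_pause` is hypothetical, and the 5 to 15 second default simply reuses the range suggested earlier in this article.

```python
import random
import time


def polite_pause(min_seconds=5.0, max_seconds=15.0, sleep=time.sleep, rng=random):
    """Sleep for a random duration between the two bounds and return it."""
    delay = rng.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay
```

Call `polite_pause()` between requests. The `sleep` and `rng` parameters exist only so the function can be tested without actually waiting.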

Best Practices for Stable Scraping

Want fewer errors? Follow these habits:

- Batch keywords smartly. Google allows up to 5 per request.

- Log everything. Store errors in a file.

- Validate inputs. Check timeframe and geo before calling the API.

- Cache results. Avoid repeating identical requests.

- Use try-except blocks. Never let your script crash.

Example error handling:

try:
    data = pytrends.interest_over_time()
except Exception as e:
    print("Error:", e)

Simple. Powerful. Necessary.
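
Batching and caching from the list above can be sketched like this. Both function names are hypothetical. The `fetch` argument stands in for whatever function you write to call pytrends, and the cache is a plain dict that lives only as long as the script runs.

```python
def batch_keywords(keywords, batch_size=5):
    """Split keywords into consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [keywords[i:i + batch_size] for i in range(0, len(keywords), batch_size)]


def fetch_cached(cache, batch, timeframe, geo, fetch):
    """Return the cached result for an identical request; fetch only on a miss."""
    key = (tuple(batch), timeframe, geo)
    if key not in cache:
        cache[key] = fetch(batch, timeframe, geo)
    return cache[key]
```

Keep in mind that each batch is a separate request, so each one gets its own 0 to 100 scale. Values from different batches are not directly comparable.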

When You Should Not Scrape

Sometimes scraping is the wrong tool.

- If you need high-frequency real-time data.

- If you need exact search volumes.

- If legal compliance is critical.

Google Trends is sampled data. It is great for patterns. Not perfect numbers.

Debugging Checklist

When something breaks, check this list:

- Is pytrends updated?

- Are you sending requests too fast?

- Are geo codes correct?

- Is timeframe formatted correctly?

- Does the keyword show data in the browser?

- Are you handling empty responses?

Most issues come from one of these.

Making It Fun: Automate Trend Alerts

Once your scraping works, do something cool.

Create:

- Email alerts for rising keywords.

- A dashboard using Streamlit.

- A weekly trend report PDF.

You can detect spikes like this:

if data['Python'].iloc[-1] > 80:
    print("Python is trending!")

Now your script becomes your trend radar.
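
A fixed threshold like 80 only tells you the keyword is near its peak for the window. An alternative, sketched below, compares the latest value with the average of the few points before it. The name `is_spike` and the default window and ratio are assumptions to tune, not recommended values.

```python
from statistics import mean


def is_spike(values, window=4, ratio=1.5):
    """Return True if the latest value exceeds ratio times the mean of the
    preceding `window` values. Returns False with too little history."""
    if len(values) < window + 1:
        return False
    baseline = mean(values[-(window + 1):-1])
    return baseline > 0 and values[-1] > ratio * baseline
```

With a pytrends DataFrame, you could call it as `is_spike(data['Python'].tolist())`.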

Final Thoughts

Scraping Google Trends with Python is not hard. It just requires patience. Most errors are small. Rate limits. Bad parameters. Old libraries.

The key lessons are simple:

- Go slow.

- Validate inputs.

- Handle errors gracefully.

- Keep tools updated.

Use pytrends wisely. Respect request limits. Test everything in the browser first. Once stable, your setup can run for months without issues.

And that is the magic. Clean data. Fresh trends. No headaches.

Happy scraping. 🚀